More Big Data: New York State building footprints online

The New York State GIS Program Office (NYSGPO) recently announced the release of an amazing web service that hosts millions of building footprints across 30 counties, made available by the New York State Energy Research and Development Authority (NYSERDA). NYSERDA has worked closely with Columbia University’s Center for International Earth Science Information Network (CIESIN) to generate these building footprints. They recently incorporated the Microsoft version 2 building footprints as well. The service also uses data made available by local governments in the state from photogrammetric and planimetric mapping missions that those governments funded themselves.

We have written extensively about CIESIN in this blog and in our book: CIESIN is one of our very favorite organizations in terms of useful data and visualizations. I know many of the fine researchers there personally and have enormous respect for them. For more information on the program that made the building footprints available, see: http://fidss.ciesin.columbia.edu/home and to directly download the footprints by county visit http://fidss.ciesin.columbia.edu/building_data_adaptation. Note that the work is in progress; as of this writing, not all counties are finished yet, but keep checking the links in this essay.

The NYSGPO created a Map Service and a Feature Service so you can load these building footprints directly into a GIS such as ArcGIS Pro or ArcGIS Online. Each footprint carries attributes including its source, date, 100-year and 500-year flood impact, and county building footprint download links. The site includes the statement that “NYS ITS is not responsible for the data quality that feed the services, users should contact the sources of the data with questions or comments.”

- Feature Service – https://gisservices.its.ny.gov/arcgis/rest/services/BuildingFootprints/FeatureServer

- Map Service – https://gisservices.its.ny.gov/arcgis/rest/services/BuildingFootprints/MapServer
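For readers who prefer scripting to point-and-click loading, the Feature Service can also be queried through the standard ArcGIS REST API `query` operation. The Python sketch below only builds a request URL for footprints intersecting a small bounding box. It assumes the footprints are layer `0` of the service and that the server supports `f=geojson` output, neither of which we have confirmed; the function name `build_query_url` and the example coordinates are hypothetical.

```python
from urllib.parse import urlencode

# Feature Service URL published by NYSGPO (from the list above).
FEATURE_SERVICE = (
    "https://gisservices.its.ny.gov/arcgis/rest/services/"
    "BuildingFootprints/FeatureServer"
)


def build_query_url(layer_id, bbox, out_fields="*", max_records=100):
    """Return an ArcGIS REST 'query' URL for footprints intersecting bbox.

    layer_id: index of the footprint layer (0 is an ASSUMPTION; check the
              service page for the real index).
    bbox:     (xmin, ymin, xmax, ymax) in WGS84 longitude/latitude.
    """
    xmin, ymin, xmax, ymax = bbox
    params = {
        "where": "1=1",                           # no attribute filter
        "geometry": f"{xmin},{ymin},{xmax},{ymax}",
        "geometryType": "esriGeometryEnvelope",   # geometry is a bounding box
        "inSR": "4326",                           # bbox given in lon/lat
        "spatialRel": "esriSpatialRelIntersects",
        "outFields": out_fields,
        "returnGeometry": "true",
        "resultRecordCount": str(max_records),    # keep test requests small
        "outSR": "4326",
        "f": "geojson",                           # ASSUMED supported by server
    }
    return f"{FEATURE_SERVICE}/{layer_id}/query?{urlencode(params)}"
```

Passing the resulting URL to `urllib.request.urlopen` (or pasting it into a browser) should return a GeoJSON feature collection if the assumptions hold; check the service's own page for the real layer index and maximum record count before requesting large areas.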

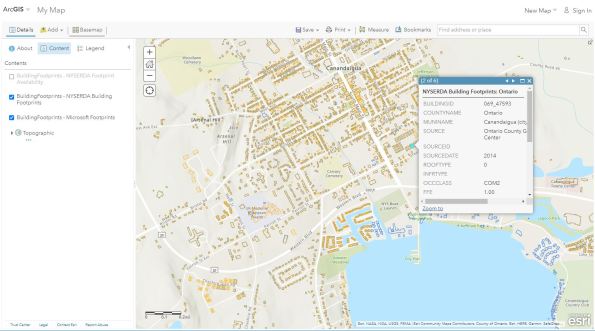

My test of adding this data from the feature service and examining it in ArcGIS Online (shown in the image above) was easy and straightforward. The download links above also worked without a problem. I salute this initiative and look forward to more data and further initiatives. NYSGPO will continue to work with NYSERDA and CIESIN as more footprints become available, and will also incorporate the publicly available Microsoft building footprints. This is another amazing manifestation of a GIS trend we have written about in other essays in this Spatial Reserves blog: Big Data. For more, see all of our posts on this topic: Big Data.

On a related data access note, for years many of us have grappled with sites that rely on outdated FTP services, which are difficult to access in a modern web browser. This same office, the NY State GIS Program Office, recently reported that it has added HTTPS access for downloading files. This replaces the FTP links used in the Discover GIS NY applications (orthos.dhses.ny.gov) and in the data index services listed below. The change means the download links will work in Chrome, Edge, Firefox and Internet Explorer (IE), so users no longer need to rely on IE alone to get them to work. Good news for data users!

The data index services from this organization are wonderful and are listed below:

- NYS Orthoimagery: https://orthos.its.ny.gov/arcgis/rest/services/vector/ortho_indexes/MapServer

- NYS DEM: https://orthos.its.ny.gov/arcgis/rest/services/vector/dem_indexes/MapServer

- NYS LIDAR LAS: https://orthos.its.ny.gov/arcgis/rest/services/vector/las_indexes/MapServer
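Each of these index services will describe itself when requested with `f=json`, which is a quick way to see what layers it contains before loading it into a GIS. The sketch below assumes the standard ArcGIS REST service description, whose `layers` array lists each layer's `id` and `name`; the dictionary keys and the function names `metadata_url` and `list_layers` are our own illustrative choices.

```python
import json
from urllib.parse import urlencode

# The three index services listed above.
BASE = "https://orthos.its.ny.gov/arcgis/rest/services/vector"
INDEX_SERVICES = {
    "orthoimagery": f"{BASE}/ortho_indexes/MapServer",
    "dem": f"{BASE}/dem_indexes/MapServer",
    "lidar_las": f"{BASE}/las_indexes/MapServer",
}


def metadata_url(service):
    """URL that asks a MapServer to describe itself as JSON (f=json)."""
    return f"{INDEX_SERVICES[service]}?{urlencode({'f': 'json'})}"


def list_layers(service_json):
    """Return (id, name) pairs from the 'layers' array of a service description."""
    description = json.loads(service_json)
    return [(layer["id"], layer["name"]) for layer in description.get("layers", [])]
```

For example, `list_layers(urllib.request.urlopen(metadata_url("lidar_las")).read().decode())` should return the LAS index layers, assuming the service is reachable and follows the usual description format.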

—Joseph Kerski

How much data is out there?

As this blog is all about data, and about the advent of the truly “Big Data” world, exactly how much data are we talking about? Below is one source of information about how much data actually exists today and how much is projected to exist in the near future.

How much data exists and is projected to exist?

Aydin, O. (2021). Spatial Data Science: Transforming Our Planet [Conference presentation]. 2021 Los Angeles Geospatial Summit, Los Angeles, CA, United States.

Because our blog and book are also about encouraging people to check data sources, I would like to add that the above information came from Seagate’s annual data report (https://www.seagate.com/our-story/data-age-2025/). An abridged version appears in this article from Forbes: https://www.forbes.com/sites/tomcoughlin/2018/11/27/175-zettabytes-by-2025/?sh=3fe81b4d5459.

These figures are staggering, and they raise many questions: How much of the above data is geospatial data? How much is not geospatial yet, but is potentially mappable? Which data should be mapped? Consider, for example, the small percentage of tweets that are geotagged. Should more be geotagged? What would we gain by doing so?

More importantly: What will we do with all this data? Will we be able to sort out the important from the trivial to continue to advance society in health, safety, and sustainability? How must geotechnologies evolve to remain viable in the big data world? I look forward to your comments below.

–Joseph Kerski

Reflections on the Effective Use of Geospatial Big Data article

Glyn Arthur, in a thought-provoking article in GIM International entitled “Effective Use of Geospatial Big Data”, raises several issues that have been running through the Spatial Reserves blog. The first is that the “heart of any geospatial analysis system is increasingly becoming the server.” Glyn, a GIS professional with over 25 years of experience, then dives into one of the chief challenges that this environment brings, namely, dealing with the increasing quantity and variety of data that the world produces. Of particular importance are emerging sensor platforms, which must be incorporated into future GIS applications. The second point is the need to embrace, not avoid, the world of big data and its benefits, while also recognizing the challenges it brings. The third point is to carefully consider the true costs of the data server and decision-making solution when making a purchasing decision.

Frankly, I found the “don’t beat around the bush” theme of Glyn’s article refreshing. This is evident in such statements as, “for mission-critical systems, purposely designed software is required, tested in the most demanding environments. Try doing it cheaper and you only end up wasting money.” Glyn also points out that the “maps gone digital” attitude “disables.” I think what Glyn means by this is that systems built around the view that GIS is just a digital means of doing what we used to do with paper maps will be unable to meet the needs of organizations in the future (or dare I say, even today). Server systems must move away from the “extract-transform-load” paradigm to meet users’ demands for high speed and large data volumes. Indeed, in this blog we have praised portals that allow data to be streamed directly from their sites into GIS packages, such as those in Utah and North Dakota. The article also digs into the nuts and bolts of deciding which solution to purchase, considering support, training, backwards compatibility, and the needs of the user and developer community. Glyn points out something that might not sit well with some, but that I think is relevant and needs to be grappled with by the GIS community: a weakness of Open Source software is the occasional lack of training from people with relevant qualifications who have a direct relationship with the original coding team, particularly when lives and property are at stake.

Glyn cites examples from Luciad’s work with big data users such as NATO, EUROCONTROL, Oracle, and Engie Ineo. Geospatial server solutions should be able to connect to a multitude of geographic data formats and publish data with a few clicks. Their data must be accessible and representable in any coordinate system, especially temporal and 3D data that include ground elevation data and moving objects.

Glyn Arthur’s article about effective use of geospatial big data is well-written and thought-provoking.

Data Drives Everything (But the Bridges Need a Lot of Work)

A new article in Earthzine entitled “Data Drives Everything, but the Bridges Need a Lot of Work” by Osha Gray Davidson seems to encapsulate one of the main themes of this blog and our book.

Dr Francine Berman directs the Center for a Digital Society at Rensselaer Polytechnic Institute, in Troy, New York, and as the article states, “has always been drawn to ambitious ‘big picture’ issues” at the “intersection of math, philosophy, and computers.” Her project, the Research Data Alliance (RDA), has a goal of changing the way in which data are collected, used, and shared to solve specific problems around the globe. Those large and important tasks should sound familiar to most GIS professionals.

And the project seems to have resonated with others, too: 1,600 members from 70 countries have joined the RDA. Reaching across boundaries and breaking down barriers that make data sharing difficult or impossible is one of the RDA’s chief goals. Finding solutions to real-world problems is accomplished through Interest Groups, which then create more focused Working Groups. I was pleased to see Interest Groups such as Big Data Analytics, Data In Context, and Geospatial, but at this point, a Working Group for Geospatial is still needed. Perhaps someone from the geospatial community needs to step up and lead the Working Group effort. I read the charter for the Geospatial Interest Group, and though brief, it seems solid, identifying some of the chief challenges and the major organizations to work with in the future to make its vision a reality.

I wish the group well, but simple wishing isn’t going to achieve data sharing for better decision making. As we point out in our book with regard to this issue, geospatial goals for an organization like this are not going to be realized without the GIS community stepping forward. Please investigate the RDA and consider how you might help their important effort.

Always on: The analysts are watching …

We recently came across the Moves App, an always-on data logger that records walking, cycling and running activities, with the option to monitor over 60 other activities that can be configured manually. By keeping track of calorie burn during both activity and idle time, the app provides ‘an automatic diary of your life’ and, by implication, assuming location tracking is always enabled as well, an automatic log of your location throughout each day. While this highlights a number of privacy concerns we have written about in the past (including Location Privacy: Cellphones vs. GPS, and Location Data Privacy Guidelines Released), it also opens up possibilities for insightful real-time or near real-time analytical investigations into what wearers of a particular device or users of a particular app are doing at any given time.

Gizmodo reported today on the activity chart released by Jawbone, makers of the Jawbone UP wristband tracking device, which showed a spike in activity among UP users at the time a magnitude 6.0 earthquake struck the Bay Area of Northern California in the early hours of Sunday 24th August 2014. Analysis of the users’ data revealed some insight into the geographic extent of the quake’s impact, with the number of UP wearers active at the time of the quake decreasing with increasing distance from the epicentre.

Source: The Jawbone Blog
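The distance pattern Jawbone described can be expressed as a simple distance-band analysis. The Python sketch below is not Jawbone’s method: it assumes hypothetical per-user records of (latitude, longitude, active-at-event-time), computes great-circle (haversine) distances from an epicentre on a spherical Earth, and reports the share of active users in each distance band. The record format, the 25 km default band width and the function names are illustrative assumptions.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    # min() guards against rounding pushing a slightly above 1
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def active_share_by_band(users, epicenter, band_km=25.0):
    """Share of users active at event time, grouped into distance bands.

    users:     iterable of (lat, lon, was_active) tuples (HYPOTHETICAL records).
    epicenter: (lat, lon) in degrees.
    Returns {band_start_km: share_active}, ordered by distance.
    """
    counts = {}  # band_start_km -> (total users, active users)
    for lat, lon, active in users:
        d = haversine_km(epicenter[0], epicenter[1], lat, lon)
        band = int(d // band_km) * band_km
        total, act = counts.get(band, (0, 0))
        counts[band] = (total + 1, act + (1 if active else 0))
    return {band: act / total for band, (total, act) in sorted(counts.items())}
```

Reporting only band-level aggregates like these, rather than individual tracks, is one way such an analysis could respect the privacy concerns raised above.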

This example provides another timely illustration of just how much personal location data is being collected and how that data may be used in ways never really anticipated by the end users. However, it also shows the potential for using devices and apps like these to provide real-time monitoring of what’s going on at any given location, information that could be used to help save lives and property. As with all new innovations, there are pros and cons to consider; getting the right balance between respecting the privacy of users and reusing some of the location data will help ensure that data mining initiatives such as this will be seen as positive and beneficial and not invasive and creepy.

Geospatial Data Integration Challenges and Considerations

A recent article in Sensors & Systems: Making Sense of Global Change raised key issues regarding challenges and considerations in geospatial data integration. Author Robert Pitts of New Light Technologies recognizes that the increased availability of data presents opportunities for improving our understanding of the world, but that combining diverse data remains a challenge for several reasons. I like the way he cuts through the noise and captures the key analytical considerations, which we also address in our book, The GIS Guide to Public Domain Data. These include coverage, quality, compatibility, geometry type and complexity, spatial and temporal resolution, confidentiality, and update frequency.

In today’s world of increasingly available data, and ways to access that data, integrating data sets to create decision-making dashboards for policymakers may seem like a daunting task–much worse than that term paper you were putting off writing until the last minute. However, breaking down integration tasks into the operational considerations that Mr. Pitts identifies may help the geospatial and policymaking communities make progress toward the overall goal. These operational considerations include access method, format and size of data, data model and schema, update frequency, speed and performance, and stability and reliability.
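Several of the considerations Mr. Pitts lists can be checked mechanically before any integration work starts. The Python sketch below is our own illustration, not his method: it compares hypothetical metadata profiles for two datasets and flags a coordinate system mismatch, differing geometry types, large gaps in spatial resolution or update frequency, and differing access methods. The `DatasetProfile` fields and the ratio thresholds are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class DatasetProfile:
    """HYPOTHETICAL metadata summary for one dataset to be integrated."""
    name: str
    crs: str             # e.g. "EPSG:4326"
    geometry_type: str   # e.g. "point", "line", "polygon", "raster"
    resolution_m: float  # spatial resolution or positional accuracy, metres
    update_days: int     # typical number of days between updates
    access_method: str   # e.g. "REST", "download", "FTP"


def integration_issues(a, b, max_res_ratio=4.0, max_update_ratio=4.0):
    """List considerations that need attention before combining a and b."""
    issues = []
    if a.crs != b.crs:
        issues.append(f"reproject: {a.name} is {a.crs}, {b.name} is {b.crs}")
    if a.geometry_type != b.geometry_type:
        issues.append(f"geometry types differ: {a.geometry_type} vs {b.geometry_type}")
    fine = min(a.resolution_m, b.resolution_m)
    coarse = max(a.resolution_m, b.resolution_m)
    if fine > 0 and coarse / fine > max_res_ratio:
        issues.append(f"resolution gap: {fine} m vs {coarse} m")
    fast = min(a.update_days, b.update_days)
    slow = max(a.update_days, b.update_days)
    if fast > 0 and slow / fast > max_update_ratio:
        issues.append(f"update frequency gap: every {fast} vs {slow} days")
    if a.access_method != b.access_method:
        issues.append(f"access methods differ: {a.access_method} vs {b.access_method}")
    return issues
```

A check like this does not resolve anything by itself, but it turns the list of considerations into an explicit to-do list for whoever builds the dashboard.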

Fortunately, as Mr. Pitts points out, “operational dashboards” are appearing that help decision makers work with geospatial data in diverse contexts and at diverse scales. These include the US Census Bureau’s “OnTheMap for Emergency Management”, based on Google tools, and the Florida State Emergency Response Team’s Geospatial Assessment Tool for Operations and Response (GATOR), based on ArcGIS Online technology.

As we discuss in our book and in this blog, portals or operational dashboards will not by themselves ensure that better decisions are made. I see two chief challenges with these dashboards and make the following recommendations: (1) Make sure that those who create them are not simply putting something up quickly to satisfy an agency mandate. Rather, those who create them need to understand the integration challenges listed above as they build the dashboard. Furthermore, since the decision-makers are unlikely to be geospatial professionals who understand scale, accuracy, and so on, the creators of these dashboards need to communicate these considerations to dashboard users in an understandable way. (2) Make sure that the dashboards are maintained and updated. If you are a regular reader of this blog, you know that we are blunt in our criticism of portals that may be well-intentioned but are out of date, extremely difficult to use, or both. For example, the US Census dashboard that I analyzed above contained emergencies that were three months old, despite the fact that I had checked the current date box for my analysis.

Take a look around at our world. We need to incorporate geospatial technologies in decision making across the private sector, nonprofit organizations, and in government, at all levels and scales. It is absolutely critical that geospatial tools and data are placed into the hands of decision makers for the benefit of all. Progress is being made, but it needs to happen at a faster pace through the effort of the geospatial community as well as key decision makers working together.

Free versus fee

In The GIS Guide to Public Domain Data we devoted one chapter to a discussion of the Free versus Fee debate: Should spatial data be made available for free or should individuals, companies and government organisations charge for their data? In a recently published article, Sell your data to save the economy and your future, the author Jaron Lanier argues that a ‘monetised information economy’, where information is a commodity that is traded to the advantage of both the information provider and the information collector, is the best way forward.

Lanier argues that although the current movement for making data available for free has become well established, with many arguing that it has the potential to democratise the digital economy through access to open software, open spatial data, open education resources and the like, insisting that data be available for free will ultimately mean that a small digital elite thrives at the expense of the majority. Data, and the information products derived from them, are the new currency of the digital age, and those who don’t have the opportunity to take advantage of this source of remuneration will lose out. Large IT companies with the best computing facilities, which collect and re-use our information, will be the winners, with their ‘big data’ crunching computers ‘... guarded like oilfields’.

In one vision of an alternative information economy, people would be paid when data they made available via a network were accessed by someone else. Could selling the data that are collected by us, and about us, be a viable option and would it give us more control over how the data are used? Or is the open approach to data access and sharing the best way forward?

Geospatial Advances Drive the Big Data Problem but Also its Solution

In a recent essay, Erik Shepard claims that geospatial advances drive the big data problem but also its solution: http://www.sensysmag.com/article/features/27558-geospatial-advances-drive-big-data-problem,-solution.html. The expansion of geospatial data is estimated at 1 exabyte per day, according to Dr. Dan Sui. Land use data, satellite and aerial imagery, transportation data, and crowd-sourced data all contribute to this expansion, but GIS also offers tools to manage the very data that it is contributing to.
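To put Dr. Sui’s estimate in more familiar terms, here is the annual arithmetic, assuming decimal SI units (1 EB = 10^18 bytes, 1 ZB = 10^21 bytes) and a constant daily rate:

$$
1\ \tfrac{\text{EB}}{\text{day}} \times 365\ \tfrac{\text{days}}{\text{year}} = 365\ \tfrac{\text{EB}}{\text{year}} = 3.65 \times 10^{20}\ \tfrac{\text{bytes}}{\text{year}} \approx 0.37\ \tfrac{\text{ZB}}{\text{year}}
$$

At that rate, a little over a third of a zettabyte of new geospatial data would accumulate every year.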

We discuss these issues in our book, The GIS Guide to Public Domain Data. These statements from Shepard are particularly relevant to the reflections we offer in our book: “Today there is a dawning appreciation of the assumptions that drive spatial analysis, and how those assumptions affect results. Questions such as what map projection is selected – does it preserve distance, direction or area? Considerations of factors such as the modifiable areal unit problem, or spatial autocorrelation.”

Indeed! Today’s data users have more data at their fingertips than ever before. But with that data comes choices about what to use, how, and why. And those choices must be made carefully.
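Shepard’s question of whether a projection preserves distance, direction or area can be made concrete with a familiar case. As an illustration of our own choosing (not an example from his essay), the Mercator projection on a spherical Earth preserves direction locally, but its scale factor grows with latitude φ, so areas are inflated by the square of that factor:

$$
k(\varphi) = \sec\varphi, \qquad \frac{A_{\text{map}}}{A_{\text{true}}} = k(\varphi)^2 = \sec^2\varphi, \qquad \sec^2 60^\circ = \left(\frac{1}{0.5}\right)^2 = 4
$$

At 60° latitude, then, a region appears four times larger than an equal-sized region at the equator, which is one reason an area-based analysis should not be run on Mercator coordinates.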

Recent Comments