Data Pipelines: A new tool to import data from 3rd Party APIs, AWS, CSVs, and more

The June 2023 release of ArcGIS Online introduced a new data integration capability, Data Pipelines. This application, currently in beta, provides a workbench-style user interface for importing and cleaning data from cloud data stores, such as AWS and Snowflake, and for reading files in formats such as CSV and GeoJSON. Once you have connected to your data, you can engineer it into the desired state and then write it out to a feature layer. This new feature in ArcGIS Online is central to the themes of this Spatial Reserves data blog, and I trust it will be of interest to our readers.

According to my colleague’s post linked here, you can:

- Connect to datasets in your external data stores, like Amazon S3 or Snowflake.

- Ingest public data that is accessible via URL, such as datasets found in open data portals or a downloadable CSV provided by your local government.

- Filter and clean your data using data processing tools, like Filter by attribute, Select fields, and Remove duplicates.

- Enhance your data by joining it with information from Living Atlas layers using the Join tool, or use Arcade functions to calculate field values using the Calculate field tool.

- Integrate and clean data in ArcGIS Online with an easy-to-use drag-and-drop interface.

- Create reproducible, no-code data prep workflows.
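To make the cleaning steps in the list above concrete, here is a minimal plain-Python sketch of what filtering by attribute, selecting fields, and removing duplicates each do to a set of records. This is only a conceptual illustration: Data Pipelines itself is a no-code tool, the function names below are hypothetical and not part of any Esri API, and the sample rows are invented.

```python
from typing import Dict, List

Record = Dict[str, str]


def filter_by_attribute(records: List[Record], field: str, value: str) -> List[Record]:
    """Keep only the records whose `field` equals `value`."""
    return [r for r in records if r.get(field) == value]


def select_fields(records: List[Record], fields: List[str]) -> List[Record]:
    """Keep only the named fields in each record."""
    return [{f: r[f] for f in fields if f in r} for r in records]


def remove_duplicates(records: List[Record]) -> List[Record]:
    """Drop records that are identical to an earlier record, preserving order."""
    seen = set()
    unique = []
    for r in records:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


# Invented sample rows, for illustration only.
records = [
    {"id": "1", "category": "Noise", "district": "North"},
    {"id": "2", "category": "Heat", "district": "South"},
    {"id": "1", "category": "Noise", "district": "North"},
    {"id": "3", "category": "Noise", "district": "East"},
]

# Chain the three steps, much as you would connect tools on the canvas.
noise_only = filter_by_attribute(records, "category", "Noise")
trimmed = select_fields(noise_only, ["id", "district"])
cleaned = remove_duplicates(trimmed)
print(cleaned)
```

Each function takes a list of records and returns a new list, which is why the steps can be chained in any order, much like connecting tools one after another in a visual workflow.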

My colleague Brian Baldwin recently illustrated this, as shown here: he pointed Data Pipelines at the New York City 311 dataset hosted on the city's Open Data platform and built a layer from it in about 2 minutes. Instead of the typical open data workflow, in which you would have to export the data or write a Python script to do so, Brian created a data pipeline that brings the data in automatically and can even run on a recurring schedule to keep the data up to date.

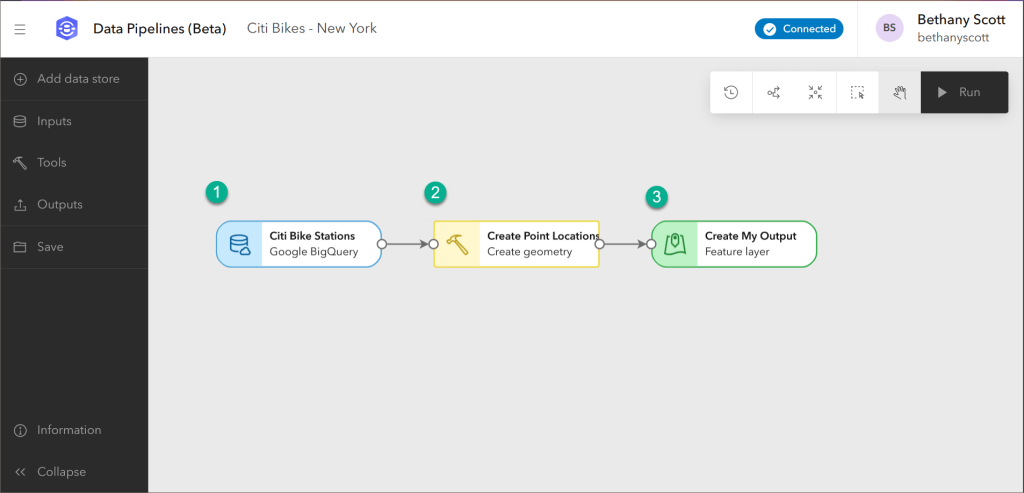

To launch the tool in ArcGIS Online, open the app launcher (the icon that looks like a 3×3 grid of dots in the upper right of the ArcGIS Online interface) and select Data Pipelines. Then, create your first data pipeline. The user interface provides a blank canvas on which you assemble the elements of a data model that tells the app what you want to do with the data. In the UI, you then provide the following:

- Inputs–These are the connections to data sources used to read in the data you want to prepare. You can add one or multiple inputs to build your workflow. A full list of supported inputs can be found here.

- Tools–Once you’re connected to your data, you can configure tools to prepare and transform your data. For example, you can filter for certain records using queries, integrate datasets by using joins, merge multiple datasets together, or calculate a geometry field to enable location. A full list of the available tools can be found here.

- Outputs–Once your data is prepared, it can be written to feature layers. You can create a new feature layer or update existing feature layers. For more detailed information on configuring data pipeline outputs, see the output feature layer documentation.
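The input, tool, and output stages described above can be sketched in a few lines of Python using only the standard library. This is a rough analogy rather than how Data Pipelines works internally: the function names are hypothetical, the CSV rows are invented, and a GeoJSON string stands in for the feature layer that the real tool writes.

```python
import csv
import io
import json

# Invented sample input; in practice this might be a CSV read from a URL or file.
SAMPLE_CSV = """name,latitude,longitude
Library,40.7532,-73.9822
Park,40.7829,-73.9654
Unknown,,
"""


def read_input(text):
    """Input stage: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def calculate_geometry(rows, lat_field, lon_field):
    """Tool stage: build a point geometry from two coordinate columns.

    Rows with missing or non-numeric coordinates are skipped.
    """
    features = []
    for row in rows:
        try:
            lat = float(row[lat_field])
            lon = float(row[lon_field])
        except (KeyError, TypeError, ValueError):
            continue
        properties = {k: v for k, v in row.items() if k not in (lat_field, lon_field)}
        features.append({
            "type": "Feature",
            # GeoJSON orders coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [lon, lat]},
            "properties": properties,
        })
    return features


def write_output(features):
    """Output stage: serialize the features as a GeoJSON FeatureCollection."""
    return json.dumps({"type": "FeatureCollection", "features": features}, indent=2)


rows = read_input(SAMPLE_CSV)
features = calculate_geometry(rows, "latitude", "longitude")
print(write_output(features))
```

Keeping the three stages as separate functions mirrors the canvas layout: you can swap the input or add another tool without touching the output step.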

Here is what the UI looks like. The drag-and-drop user experience reminds me of using ModelBuilder in ArcGIS Pro. On an instructional note, I believe this type of diagram, with its flow between graphic elements, fosters spatial thinking as well as GIS skills.

A view of data pipelines in use.

The introductory blog post includes a video overview. Once you are ready to get started, you can create your first data pipeline via this tutorial. The Esri Community on Data Pipelines is another great place to learn more.

I look forward to using this tool and to hearing your reactions to it.

–Joseph Kerski

Update (Feb 2024): This tool has been renamed ArcGIS Data Pipelines. –Joseph Kerski